CoreAudio

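Core Audio provides a set of software technologies that make it easier for developers to implement audio features, including recording, playback, sound effects processing, positioning, format conversion, and file stream parsing. It also provides:

* A built-in equalizer and mixer that you can use in your apps

* Automatic access to audio input and output hardware

* APIs for managing your app's audio on a device that can also take phone calls

* Optimizations that extend battery life without compromising audio quality

Core Audio offers both C and Objective-C APIs. This gives you a flexible programming environment while keeping the audio signal chain low-latency.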

The full reference documentation is long. The Core Audio Essentials chapter mainly covers how Core Audio is organized and what each component can do.

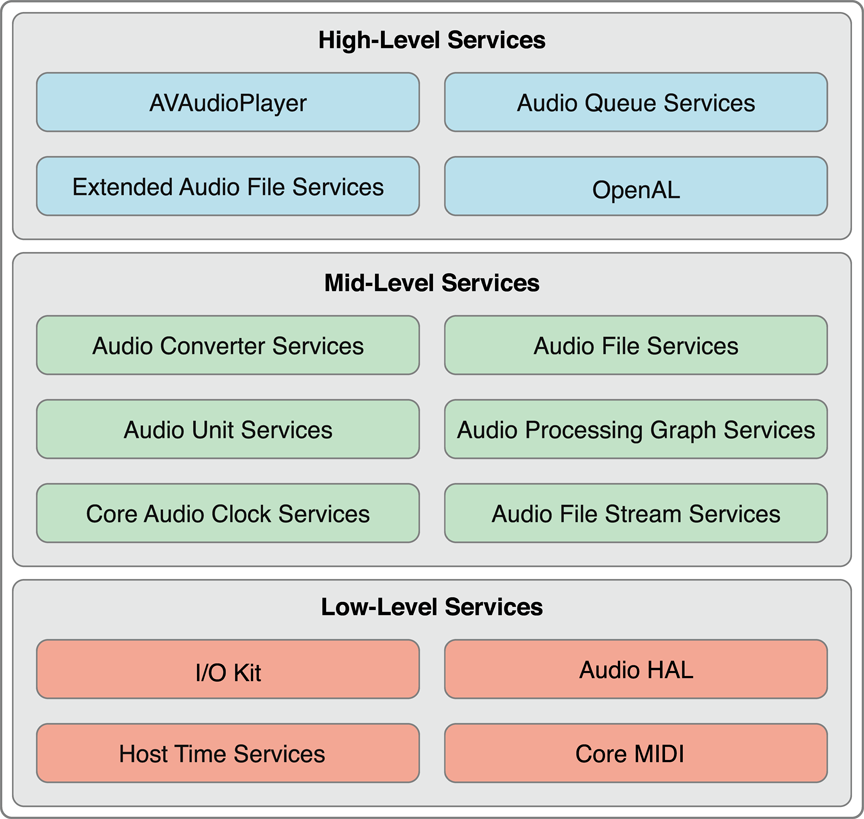

First, the structure of the API:

Here is what each API in each layer does, starting with the low-level layer:

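- I/O Kit interacts with drivers.

- Audio HAL (the audio hardware abstraction layer) provides a device-independent, driver-independent interface to the hardware.

- Core MIDI provides software abstractions for working with MIDI streams and devices.

- Host Time Services provides access to the computer's clock.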

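The mid-level and high-level interfaces are mostly self-explanatory from their English names. If one is unclear, see Core Audio Essentials.

Next, the documentation lists which framework to link for each feature. This is important: importing CoreAudio.framework alone does not give you every API. For modularity, Apple splits the functionality across several frameworks that you link only when you need them, even though each dynamic library is stored only once on the system. For example, Audio Queue Services and Audio File Services live in AudioToolbox.framework, while AVAudioPlayer, AVAudioRecorder and AVAudioSession live in AVFoundation.framework. See Core Audio Essentials for the full mapping.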

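Next comes playback setup for audio files. As the documentation explains, every playback object created from a file is given an ID when it is created. This ID acts as the handle for that object, and every playback stream plays through it. The system also defines some IDs of its own, so when playing audio, prefer an ID you created yourself to avoid playing a sound other than the one you intended.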

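Playing a sound file generally involves a few steps:

- Set the properties of the file to be played

- Handle the callback that fires when playback completes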

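Both are essential for audio playback. A commonly used format setting, an AudioStreamBasicDescription (ASBD), looks like this: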

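```c
AudioStreamBasicDescription asbd = {
    .mSampleRate       = 44100.0,
    .mFormatID         = kAudioFormatLinearPCM,
    .mFormatFlags      = kAudioFormatFlagsAudioUnitCanonical,
    .mBytesPerPacket   = 1 * sizeof(AudioUnitSampleType), // 4
    .mFramesPerPacket  = 1,
    .mBytesPerFrame    = 1 * sizeof(AudioUnitSampleType), // 4
    .mChannelsPerFrame = 2,
    .mBitsPerChannel   = 8 * sizeof(AudioUnitSampleType), // 32
    .mReserved         = 0,
};
```

These basic settings are well worth remembering. Note that kAudioFormatFlagsAudioUnitCanonical describes non-interleaved samples: each channel lives in its own buffer, so the per-frame and per-packet byte counts describe a single channel. That is why they equal 1 * sizeof(AudioUnitSampleType) (4 bytes) even though mChannelsPerFrame is 2.

With the basic playback settings covered, the next topic is data transfer, which involves two things: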

- magic cookie

- Packets

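Both exist so that the data is carried reliably and, when parsing, the decoder gets exactly the amount of data it needs to play. A magic cookie is opaque, format-specific metadata (required by compressed formats such as AAC) that must be handed to the decoder before the audio data. A packet is the smallest indivisible unit of data in a format. For constant-bit-rate (CBR) formats such as linear PCM every packet has the same size, while for variable-bit-rate (VBR) formats each packet needs a packet description giving its offset and size.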
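To show how the ASBD fields and packets fit together, here is a small plain-C sketch. It does not include AudioToolbox. Instead it uses two hypothetical stand-in structs, ASBD and PacketDesc, that mirror a subset of the fields of AudioStreamBasicDescription and AudioStreamPacketDescription so it compiles anywhere. For packed, interleaved linear PCM one packet is one frame, so a packet's byte offset is a multiplication. For VBR data mBytesPerPacket is 0, and the offset has to come from a packet description.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for a subset of AudioStreamBasicDescription. */
typedef struct {
    double   mSampleRate;
    uint32_t mBytesPerPacket;   /* 0 for VBR formats */
    uint32_t mFramesPerPacket;  /* 1 for linear PCM */
    uint32_t mBytesPerFrame;
    uint32_t mChannelsPerFrame;
    uint32_t mBitsPerChannel;
} ASBD;

/* Hypothetical stand-in for AudioStreamPacketDescription. */
typedef struct {
    int64_t  mStartOffset;            /* byte offset of the packet in the data */
    uint32_t mVariableFramesInPacket;
    uint32_t mDataByteSize;           /* size of this packet in bytes */
} PacketDesc;

/* Derive the size fields for packed, interleaved linear PCM. */
ASBD make_interleaved_pcm(double sampleRate, uint32_t channels, uint32_t bitsPerChannel) {
    ASBD d;
    d.mSampleRate       = sampleRate;
    d.mChannelsPerFrame = channels;
    d.mBitsPerChannel   = bitsPerChannel;
    d.mBytesPerFrame    = channels * (bitsPerChannel / 8); /* one sample per channel */
    d.mFramesPerPacket  = 1;                               /* PCM: one frame per packet */
    d.mBytesPerPacket   = d.mBytesPerFrame;
    return d;
}

/* Byte offset of packet `index`: a multiplication for CBR, a lookup for VBR. */
int64_t packet_byte_offset(const ASBD *d, const PacketDesc *descs, int64_t index) {
    if (d->mBytesPerPacket != 0) {
        return index * (int64_t)d->mBytesPerPacket;
    }
    return descs[index].mStartOffset; /* VBR: packets differ in size */
}

/* Bytes needed for `seconds` of CBR audio (CBR only: needs nonzero frames per packet). */
uint64_t cbr_bytes_for_seconds(const ASBD *d, double seconds) {
    double packets = d->mSampleRate / d->mFramesPerPacket * seconds;
    return (uint64_t)(packets * d->mBytesPerPacket);
}
```

For 16-bit stereo PCM at 44.1 kHz this gives 4 bytes per frame and per packet, so half a second is 88,200 bytes.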

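After the data-transfer structures comes handling what happens during and after transfer, that is, fault tolerance. This part is very important, for example:

- A phone call comes in while a sound is playing

- A system sound fires while a sound is playing

The high-level audio session API handles these interruptions much better.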
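As I understand AVAudioSession's interruption notifications, the usual pattern is this. When an interruption begins, the system has already silenced your audio, so you only record that you were interrupted. When it ends, you resume only if the system passes the "should resume" option (AVAudioSessionInterruptionOptionShouldResume). The plain-C sketch below models only that decision logic. The PlayerState enum, the Player struct and handle_interruption are hypothetical and not part of any Apple API.

```c
#include <stdbool.h>

/* Hypothetical playback states for this sketch. */
typedef enum { STATE_STOPPED, STATE_PLAYING, STATE_PAUSED } PlayerState;

typedef struct {
    PlayerState state;
    bool        interruptedWhilePlaying; /* remembers whether to resume later */
} Player;

/* began: true for "interruption began", false for "interruption ended".
   shouldResume mirrors AVAudioSessionInterruptionOptionShouldResume and is
   only meaningful when the interruption ends. */
void handle_interruption(Player *p, bool began, bool shouldResume) {
    if (began) {
        /* The system has already stopped the audio; just record the fact. */
        p->interruptedWhilePlaying = (p->state == STATE_PLAYING);
        if (p->state == STATE_PLAYING) {
            p->state = STATE_PAUSED;
        }
        return;
    }
    /* Ended: resume only if we were playing and the system allows it.
       Real code would reactivate the session and call AudioQueueStart here. */
    if (p->interruptedWhilePlaying && shouldResume) {
        p->state = STATE_PLAYING;
    }
    p->interruptedWhilePlaying = false;
}
```

If the system does not pass the resume option (for example after the user starts music in another app), the player stays paused until the user resumes it.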

Next come examples: playing a file with AVAudioPlayer, playing sounds with System Sound Services, recording, and so on. Each of these has its own chapter.

Audio Queue Services

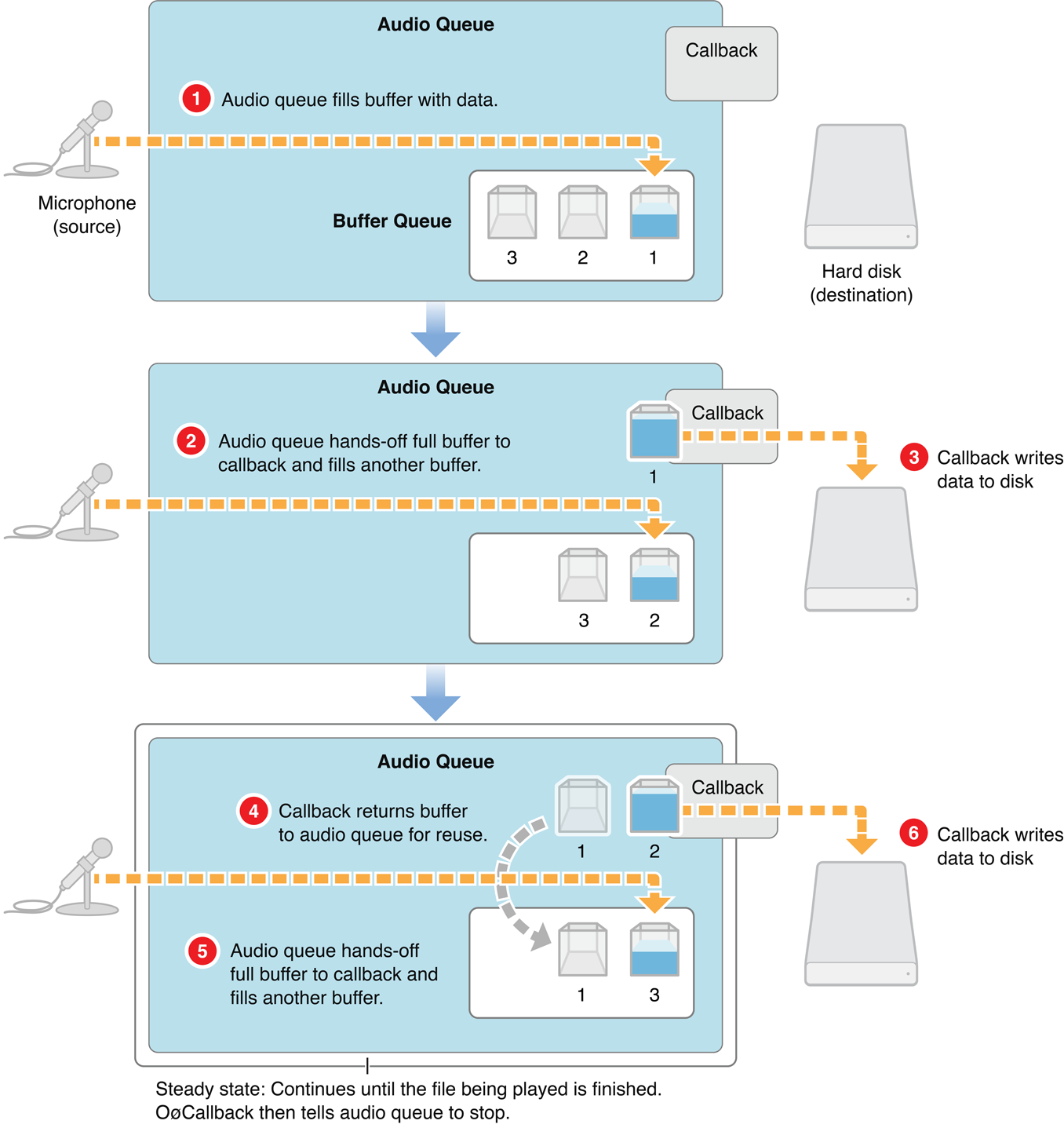

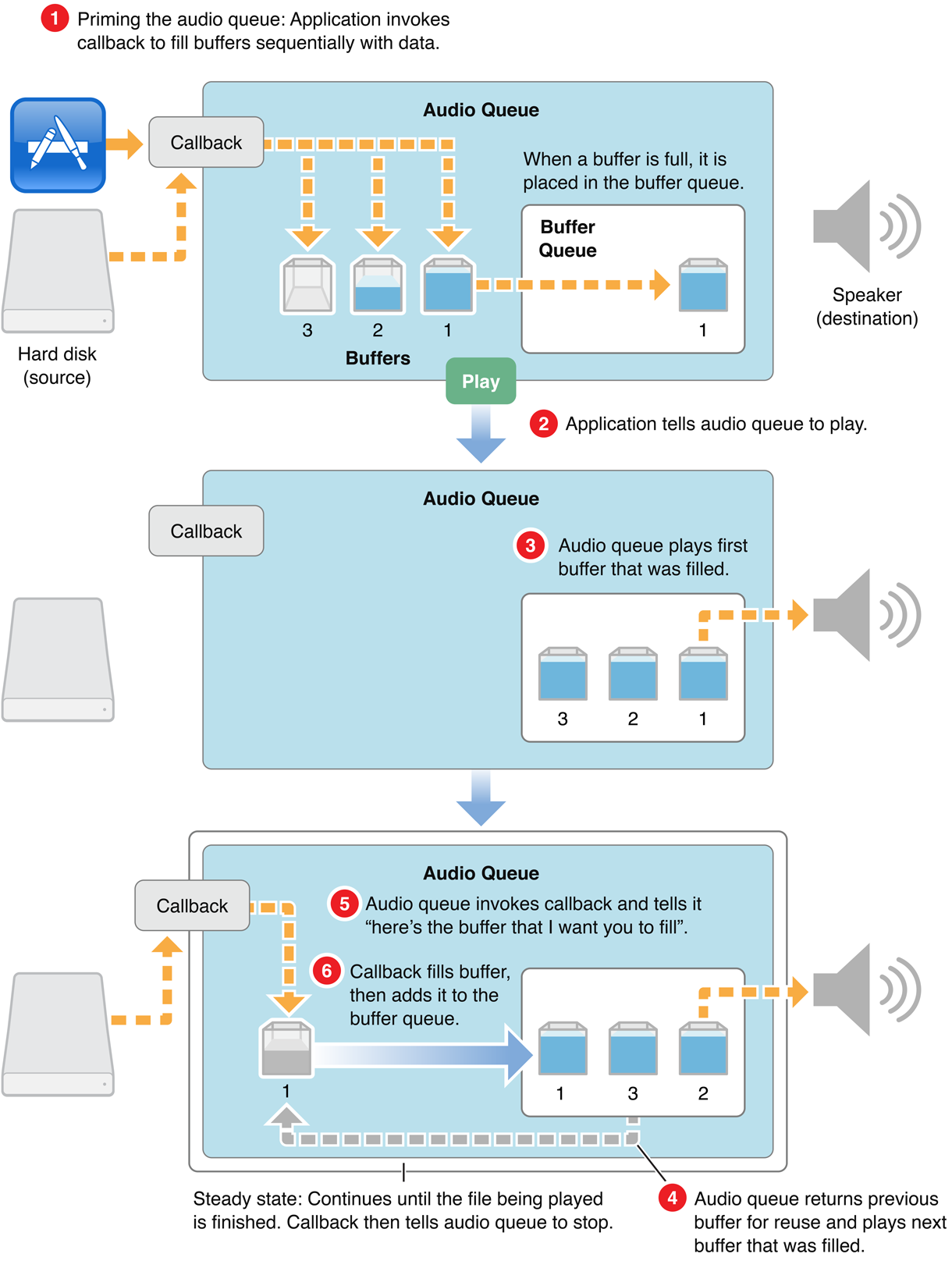

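Once you work with network stream playback, you will inevitably need to learn about Audio Queue Services. So what exactly does an audio queue do? Start with the figures above.

The figure above shows the main recording flow. It uses three buffers. When audio arrives from the input source, it fills the first buffer; once that buffer is full, the queue invokes the callback, which processes the data and then puts the buffer back in the queue. This forms a cycle.

The playback queue in the figure follows the same logic. See this blog post, which explains it in great detail.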
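The buffer cycle described above can be modeled in a few lines of plain C. This is a toy simulation, not the AudioQueue API: the BufferQueue type and the bq_enqueue, bq_dequeue, on_buffer_full and run_cycle functions are hypothetical. It primes the queue with all three buffers, then repeatedly lets the "device" fill the oldest queued buffer and runs the "callback", which hands the buffer straight back. Because it runs sequentially, it shows the order in which buffers rotate, not the concurrency that makes several buffers useful (the device keeps filling one buffer while the callback is still processing another).

```c
#include <stddef.h>

#define NUM_BUFFERS 3

/* Hypothetical model of an audio queue's buffer cycle. */
typedef struct {
    int    order[NUM_BUFFERS];     /* FIFO of buffer indices waiting to be filled */
    size_t head, count;
    int    callbacks[NUM_BUFFERS]; /* times each buffer reached the callback */
} BufferQueue;

static void bq_enqueue(BufferQueue *q, int buf) {
    q->order[(q->head + q->count) % NUM_BUFFERS] = buf;
    q->count++;
}

static int bq_dequeue(BufferQueue *q) {
    int buf = q->order[q->head];
    q->head = (q->head + 1) % NUM_BUFFERS;
    q->count--;
    return buf;
}

/* The "callback": process a full buffer (e.g. write it to a file), then re-enqueue it. */
static void on_buffer_full(BufferQueue *q, int buf) {
    q->callbacks[buf]++;
    bq_enqueue(q, buf);
}

/* Prime the queue with every buffer, then simulate `chunks` buffer fills. */
void run_cycle(BufferQueue *q, int chunks) {
    q->head = 0;
    q->count = 0;
    for (int i = 0; i < NUM_BUFFERS; i++) {
        q->callbacks[i] = 0;
        bq_enqueue(q, i);
    }
    for (int c = 0; c < chunks; c++) {
        int buf = bq_dequeue(q); /* the device fills the oldest queued buffer */
        on_buffer_full(q, buf);  /* the callback runs and hands it back */
    }
}
```

After seven fills, buffer 0 has gone through the callback three times and buffers 1 and 2 twice each, and all three buffers are back in the queue.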

The blog post above describes the role and inner workings of each object in detail and is well worth reading:

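- Audio File Stream Services (AudioFileStream) parses stream data to obtain the stream's information, preparing it for playback

- Audio File Services (AudioFile) does the same for files, but for streaming playback it needs Audio File Stream Services to determine whether the data is readable

- Audio Queue Services plays the stream data in its buffers once it has received it

- Audio Session Services handles the various cases that interrupt playback, such as an incoming phone call, and resumes playback afterwards

- AVAudioPlayer plays local audio, or streamed audio of which enough has already been cached

- AVAudioRecorder records audio

- MPMusicPlayerController plays music from the system music library; it is a high-level, heavily wrapped API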

Here is some Audio Queue Services code I wrote myself for recording to a local file and playing it back:

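Recorder.h:

```objc
#import <Foundation/Foundation.h>
#import <CoreGraphics/CoreGraphics.h> // CGFloat

@interface MMAudioCoreRecorder : NSObject

/// Average input level of the first channel (0.0 to 1.0), updated about every 20 ms while recording.
@property (nonatomic, readonly) CGFloat volume;

- (void)startRecording;
- (void)stopRecording;

@end
```

Recorder.m: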

```objc
#import "MMAudioCoreRecorder.h"
#import <AVFoundation/AVFoundation.h>
#import <AudioToolbox/AudioToolbox.h>
#import "MMASBFormat.h"

static const int kNumberBuffers = 3; // Number of audio queue buffers

typedef struct {
    AudioStreamBasicDescription mDataFormat;              // Format of the recorded audio
    AudioQueueRef               mQueue;                   // The recording audio queue created by the app
    AudioQueueBufferRef         mBuffers[kNumberBuffers]; // Buffers managed by the audio queue
    AudioFileID                 mAudioFile;               // File the recorded audio is written to
    UInt32                      bufferByteSize;           // Size of each buffer, set by DeriveBufferSize
    SInt64                      mCurrentPacket;           // Index of the next packet to write to the file
    bool                        mIsRunning;               // Whether the audio queue is running
} AQRecorderState;

@interface MMAudioCoreRecorder () {
    AQRecorderState recordState;
}
@property (nonatomic, strong) NSTimer *timer;
@property (nonatomic, readwrite) CGFloat volume;
@end

@implementation MMAudioCoreRecorder

// Input callback: the queue calls this each time a buffer has been filled.
static void HandleInputBuffer(void *aqData,
                              AudioQueueRef inAQ,
                              AudioQueueBufferRef inBuffer,
                              const AudioTimeStamp *inStartTime,
                              UInt32 inNumPackets,
                              const AudioStreamPacketDescription *inPacketDesc) {
    AQRecorderState *pAqData = (AQRecorderState *)aqData;

    // For CBR data the packet count may be 0; derive it from the byte count.
    if (inNumPackets == 0 && pAqData->mDataFormat.mBytesPerPacket != 0) {
        inNumPackets = inBuffer->mAudioDataByteSize / pAqData->mDataFormat.mBytesPerPacket;
    }

    // Write the packets to the file and advance the write position.
    if (AudioFileWritePackets(pAqData->mAudioFile,
                              false,
                              inBuffer->mAudioDataByteSize,
                              inPacketDesc,
                              pAqData->mCurrentPacket,
                              &inNumPackets,
                              inBuffer->mAudioData) == noErr) {
        pAqData->mCurrentPacket += inNumPackets;
    }

    if (!pAqData->mIsRunning) return;

    // Hand the buffer back to the queue so it can be filled again.
    AudioQueueEnqueueBuffer(pAqData->mQueue, inBuffer, 0, NULL);
}

// Size each buffer to hold `seconds` of audio, capped at maxBufferSize.
// Assumes one frame per packet, which holds for linear PCM.
// static: Player.m defines its own DeriveBufferSize, which would otherwise clash at link time.
static void DeriveBufferSize(AudioQueueRef audioQueue,
                             AudioStreamBasicDescription ASBDescription,
                             Float64 seconds,
                             UInt32 *outBufferSize) {
    static const int maxBufferSize = 0x50000; // 320 KiB
    int maxPacketSize = ASBDescription.mBytesPerPacket;
    if (maxPacketSize == 0) {
        // VBR: ask the queue for the largest packet it can produce.
        UInt32 maxVBRPacketSize = sizeof(maxPacketSize);
        AudioQueueGetProperty(audioQueue,
                              kAudioQueueProperty_MaximumOutputPacketSize,
                              &maxPacketSize,
                              &maxVBRPacketSize);
    }
    Float64 numBytesForTime = ASBDescription.mSampleRate * maxPacketSize * seconds;
    *outBufferSize = (UInt32)(numBytesForTime < maxBufferSize ? numBytesForTime : maxBufferSize);
}

// Copy the queue's magic cookie (if any) to the file; compressed formats such as MPEG-4 AAC need it.
static OSStatus SetMagicCookieForFile(AudioQueueRef inQueue, AudioFileID inFile) {
    OSStatus result = noErr;
    UInt32 cookieSize = 0;
    if (AudioQueueGetPropertySize(inQueue, kAudioQueueProperty_MagicCookie, &cookieSize) == noErr
        && cookieSize > 0) {
        char *magicCookie = (char *)malloc(cookieSize);
        if (magicCookie &&
            AudioQueueGetProperty(inQueue, kAudioQueueProperty_MagicCookie,
                                  magicCookie, &cookieSize) == noErr) {
            result = AudioFileSetProperty(inFile, kAudioFilePropertyMagicCookieData,
                                          cookieSize, magicCookie);
        }
        free(magicCookie);
    }
    return result;
}

- (instancetype)init {
    self = [super init];
    if (self) {
        // Fill in the recording format (MMASBFormat is my own helper, not shown here).
        [MMASBFormat configASBFormat:&recordState.mDataFormat];
    }
    return self;
}

- (void)dealloc {
    // Invalidates the timer and releases the queue and file if still recording.
    [self stopRecording];
}

// Replace the requested format with the complete format the queue actually uses.
- (void)updateASBFormatFromQueue {
    UInt32 dataFormatSize = sizeof(recordState.mDataFormat);
    AudioQueueGetProperty(recordState.mQueue,
                          kAudioQueueProperty_StreamDescription,
                          &recordState.mDataFormat,
                          &dataFormatSize);
}

// Path of the recording in the Documents directory.
- (NSString *)getSavePath {
    NSString *dir = [NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) lastObject];
    // The file holds CAF data, not MP3; the name is kept so the player opens the same path.
    NSString *path = [dir stringByAppendingPathComponent:@"tempAudioRecord.mp3"];
    NSLog(@"Recording file path: %@", path);
    return path;
}

// Create the file that receives the recorded audio.
- (BOOL)createAudioSaveFile {
    const char *path = [[self getSavePath] fileSystemRepresentation];
    CFURLRef audioFileURL =
        CFURLCreateFromFileSystemRepresentation(NULL, (const UInt8 *)path, strlen(path), false);
    // CAF accepts any linear PCM layout; AIFF would require big-endian samples.
    OSStatus status = AudioFileCreateWithURL(audioFileURL,
                                             kAudioFileCAFType,
                                             &recordState.mDataFormat,
                                             kAudioFileFlags_EraseFile,
                                             &recordState.mAudioFile);
    CFRelease(audioFileURL);
    return status == noErr;
}

// Poll the input level every 20 ms. The block captures self weakly to avoid
// the retain cycle self -> timer -> block -> self.
- (void)startVolumeTimer {
    [_timer invalidate];
    __weak typeof(self) weakSelf = self;
    self.timer = [NSTimer scheduledTimerWithTimeInterval:0.02
                                                 repeats:YES
                                                   block:^(NSTimer * _Nonnull timer) {
        [weakSelf readVolume];
    }];
}

// Read the current average level (0.0 to 1.0) of the first channel.
// Requires kAudioQueueProperty_EnableLevelMetering, which startRecording turns on.
- (void)readVolume {
    if (!recordState.mQueue) return;
    UInt32 channels = MAX(recordState.mDataFormat.mChannelsPerFrame, 1u);
    AudioQueueLevelMeterState meters[channels];
    UInt32 size = (UInt32)sizeof(meters);
    if (AudioQueueGetProperty(recordState.mQueue, kAudioQueueProperty_CurrentLevelMeter,
                              meters, &size) == noErr) {
        self.volume = meters[0].mAveragePower;
    }
}

- (void)startRecording {
    if (recordState.mIsRunning) return;
    // On iOS the AVAudioSession category must allow recording and the user must grant microphone access.
    recordState.mCurrentPacket = 0;

    OSStatus status = AudioQueueNewInput(&recordState.mDataFormat,
                                         HandleInputBuffer,
                                         &recordState,
                                         CFRunLoopGetCurrent(),
                                         kCFRunLoopCommonModes,
                                         0,
                                         &recordState.mQueue);
    if (status != noErr) {
        NSLog(@"AudioQueueNewInput failed: %d", (int)status);
        return;
    }

    // Create the file here (not in init) so every recording gets a fresh, open file.
    [self updateASBFormatFromQueue];
    if (![self createAudioSaveFile]) {
        AudioQueueDispose(recordState.mQueue, true);
        recordState.mQueue = NULL;
        return;
    }
    SetMagicCookieForFile(recordState.mQueue, recordState.mAudioFile);

    // Turn on level metering so readVolume has data.
    UInt32 enableMetering = 1;
    AudioQueueSetProperty(recordState.mQueue, kAudioQueueProperty_EnableLevelMetering,
                          &enableMetering, sizeof(enableMetering));

    // Allocate three half-second buffers and hand them all to the queue.
    DeriveBufferSize(recordState.mQueue, recordState.mDataFormat, 0.5, &recordState.bufferByteSize);
    for (int i = 0; i < kNumberBuffers; i++) {
        AudioQueueAllocateBuffer(recordState.mQueue, recordState.bufferByteSize, &recordState.mBuffers[i]);
        AudioQueueEnqueueBuffer(recordState.mQueue, recordState.mBuffers[i], 0, NULL);
    }

    recordState.mIsRunning = true;
    status = AudioQueueStart(recordState.mQueue, NULL);
    if (status == noErr) {
        NSLog(@"Recording started");
        [self startVolumeTimer];
    } else {
        NSLog(@"AudioQueueStart failed: %d", (int)status);
        [self stopRecording];
    }
}

- (void)stopRecording {
    [_timer invalidate];
    _timer = nil;
    if (!recordState.mQueue) return;
    NSLog(@"Recording stopped");

    recordState.mIsRunning = false;
    AudioQueueStop(recordState.mQueue, true);
    // A codec may update its cookie at the end of an encoding session, so copy it to the file again.
    SetMagicCookieForFile(recordState.mQueue, recordState.mAudioFile);
    // Disposing of the queue also frees its buffers.
    AudioQueueDispose(recordState.mQueue, true);
    recordState.mQueue = NULL;
    AudioFileClose(recordState.mAudioFile);
    recordState.mAudioFile = NULL;
}

@end
```

The corresponding Player.h:

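```objc
#import <Foundation/Foundation.h>
#import <CoreGraphics/CoreGraphics.h> // CGFloat

@interface MMAudioCorePlayer : NSObject

/// Playback gain from 0.0 (silent) to 1.0 (default); maps to kAudioQueueParam_Volume.
@property (nonatomic, assign) CGFloat volume;
/// Not implemented in this version.
@property (nonatomic, assign) CGFloat progress;

- (void)play;
- (void)pause;
- (void)stop;

@end
```

Player.m: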

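```objc
#import "MMAudioCorePlayer.h"
#import <AVFoundation/AVFoundation.h>
#import <AudioToolbox/AudioToolbox.h>

static const int kNumberBuffers = 3; // Number of audio queue buffers

typedef struct {
    AudioStreamBasicDescription   mDataFormat;              // Format of the file being played
    AudioQueueRef                 mQueue;                   // The playback audio queue
    AudioQueueBufferRef           mBuffers[kNumberBuffers]; // Buffers managed by the audio queue
    AudioFileID                   mAudioFile;               // The file being played
    UInt32                        bufferByteSize;           // Size of each buffer in bytes
    SInt64                        mCurrentPacket;           // Index of the next packet to read
    UInt32                        mNumPacketsToRead;        // Packets to read per callback
    AudioStreamPacketDescription *mPacketDescs;             // Packet descriptions (VBR only, else NULL)
    bool                          mIsRunning;               // Whether playback is in progress
} AQPlayerState;

@interface MMAudioCorePlayer () {
    AQPlayerState playerState;
}
@end

@implementation MMAudioCorePlayer

// Output callback: the queue calls this whenever a buffer has been played and needs refilling.
static void HandleOutputBuffer(void *aqData, AudioQueueRef inAQ, AudioQueueBufferRef inBuffer) {
    AQPlayerState *pAqData = (AQPlayerState *)aqData;
    if (!pAqData->mIsRunning) return;

    // ioNumBytes is in/out: on input it must hold the buffer's capacity.
    UInt32 numBytesReadFromFile = inBuffer->mAudioDataBytesCapacity;
    UInt32 numPackets = pAqData->mNumPacketsToRead;
    AudioFileReadPacketData(pAqData->mAudioFile,
                            false,
                            &numBytesReadFromFile,
                            pAqData->mPacketDescs,
                            pAqData->mCurrentPacket,
                            &numPackets,
                            inBuffer->mAudioData);
    if (numPackets > 0) {
        inBuffer->mAudioDataByteSize = numBytesReadFromFile;
        AudioQueueEnqueueBuffer(pAqData->mQueue,
                                inBuffer,
                                (pAqData->mPacketDescs ? numPackets : 0),
                                pAqData->mPacketDescs);
        pAqData->mCurrentPacket += numPackets;
    } else {
        // End of file: stop once the buffers already queued have played.
        AudioQueueStop(pAqData->mQueue, false);
        pAqData->mIsRunning = false;
    }
}

// Size each buffer for `seconds` of audio, clamped to [minBufferSize, maxBufferSize]
// (the upper cap is skipped when a single packet is larger), and count the packets that fit.
static void DeriveBufferSize(AudioStreamBasicDescription ASBDesc,
                             UInt32 maxPacketSize,
                             Float64 seconds,
                             UInt32 *outBufferSize,
                             UInt32 *outNumPacketsToRead) {
    static const int maxBufferSize = 0x50000; // 320 KiB
    static const int minBufferSize = 0x4000;  // 16 KiB
    if (ASBDesc.mFramesPerPacket != 0) {
        Float64 numPacketsForTime = ASBDesc.mSampleRate / ASBDesc.mFramesPerPacket * seconds;
        *outBufferSize = numPacketsForTime * maxPacketSize;
    } else {
        *outBufferSize = maxBufferSize > maxPacketSize ? maxBufferSize : maxPacketSize;
    }
    if (*outBufferSize > maxBufferSize && *outBufferSize > maxPacketSize) {
        *outBufferSize = maxBufferSize;
    } else if (*outBufferSize < minBufferSize) {
        *outBufferSize = minBufferSize;
    }
    *outNumPacketsToRead = *outBufferSize / maxPacketSize;
}

- (instancetype)init {
    self = [super init];
    if (self) {
        _volume = 1.0; // Default gain of an audio queue
    }
    return self;
}

- (void)dealloc {
    [self stop];
}

#pragma mark - Property setter

- (void)setVolume:(CGFloat)volume {
    _volume = volume;
    // Only adjust an existing queue; creating a new queue here would orphan the one that is playing.
    if (playerState.mQueue) {
        AudioQueueSetParameter(playerState.mQueue, kAudioQueueParam_Volume,
                               (AudioQueueParameterValue)volume);
    }
}

// Path of the file written by MMAudioCoreRecorder.
- (NSString *)getSavePath {
    NSString *dir = [NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) lastObject];
    return [dir stringByAppendingPathComponent:@"tempAudioRecord.mp3"];
}

- (BOOL)openAudioFileForPlayback {
    const char *path = [[self getSavePath] fileSystemRepresentation];
    CFURLRef audioFileURL =
        CFURLCreateFromFileSystemRepresentation(NULL, (const UInt8 *)path, strlen(path), false);
    OSStatus result = AudioFileOpenURL(audioFileURL, kAudioFileReadPermission, 0, &playerState.mAudioFile);
    CFRelease(audioFileURL);
    if (result != noErr) {
        NSLog(@"AudioFileOpenURL failed: %d", (int)result);
        return NO;
    }
    // Play the file in its own format rather than a hard-coded one.
    UInt32 dataFormatSize = sizeof(playerState.mDataFormat);
    AudioFileGetProperty(playerState.mAudioFile, kAudioFilePropertyDataFormat,
                         &dataFormatSize, &playerState.mDataFormat);
    NSLog(@"File opened, ready to play");
    return YES;
}

- (void)settingPlaybackAudioQueueBufferSizeAndNumberOfPacketsToRead {
    UInt32 maxPacketSize = 0;
    UInt32 propertySize = sizeof(maxPacketSize);
    AudioFileGetProperty(playerState.mAudioFile, kAudioFilePropertyPacketSizeUpperBound,
                         &propertySize, &maxPacketSize);
    DeriveBufferSize(playerState.mDataFormat, maxPacketSize, 0.5,
                     &playerState.bufferByteSize, &playerState.mNumPacketsToRead);

    // VBR data needs one description per packet read; CBR data needs none.
    bool isFormatVBR = (playerState.mDataFormat.mBytesPerPacket == 0 ||
                        playerState.mDataFormat.mFramesPerPacket == 0);
    free(playerState.mPacketDescs);
    playerState.mPacketDescs = isFormatVBR
        ? (AudioStreamPacketDescription *)malloc(playerState.mNumPacketsToRead * sizeof(AudioStreamPacketDescription))
        : NULL;
}

// Create the output queue and apply the current volume.
- (BOOL)prepareForPlay {
    OSStatus status = AudioQueueNewOutput(&playerState.mDataFormat,
                                          HandleOutputBuffer,
                                          &playerState,
                                          CFRunLoopGetCurrent(),
                                          kCFRunLoopCommonModes,
                                          0,
                                          &playerState.mQueue);
    if (status != noErr) return NO;
    AudioQueueSetParameter(playerState.mQueue, kAudioQueueParam_Volume,
                           (AudioQueueParameterValue)self.volume);
    return YES;
}

- (void)play {
    if (playerState.mQueue) {
        if (playerState.mIsRunning) {
            // Paused mid-file: resume the existing queue.
            AudioQueueStart(playerState.mQueue, NULL);
            return;
        }
        // Reached the end of the file: tear down and start again from the beginning.
        [self stop];
    }
    if (![self openAudioFileForPlayback]) return;
    [self settingPlaybackAudioQueueBufferSizeAndNumberOfPacketsToRead];
    if (![self prepareForPlay]) {
        playerState.mQueue = NULL;
        [self stop];
        return;
    }

    // Start from the first packet (index 0).
    playerState.mCurrentPacket = 0;
    // Must be true before priming, otherwise HandleOutputBuffer returns without enqueuing anything.
    playerState.mIsRunning = true;
    for (int i = 0; i < kNumberBuffers; ++i) {
        AudioQueueAllocateBuffer(playerState.mQueue, playerState.bufferByteSize, &playerState.mBuffers[i]);
        HandleOutputBuffer(&playerState, playerState.mQueue, playerState.mBuffers[i]);
    }

    OSStatus status = AudioQueueStart(playerState.mQueue, NULL);
    if (status == noErr) {
        NSLog(@"Playback started");
    }
}

- (void)stop {
    playerState.mIsRunning = false;
    if (playerState.mQueue) {
        AudioQueueStop(playerState.mQueue, true);
        AudioQueueDispose(playerState.mQueue, true); // also frees the queue's buffers
        playerState.mQueue = NULL;
    }
    if (playerState.mAudioFile) {
        AudioFileClose(playerState.mAudioFile);
        playerState.mAudioFile = NULL;
    }
    free(playerState.mPacketDescs);
    playerState.mPacketDescs = NULL;
}

- (void)pause {
    if (playerState.mQueue) {
        AudioQueuePause(playerState.mQueue);
    }
}

@end
```

The code above still has rough edges; I will keep updating it.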
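To get a feel for the buffer sizes the two DeriveBufferSize functions produce, here is the same arithmetic as standalone C. It copies the logic of the recorder's and the player's versions, with the ASBD reduced to the fields they read. The names derive_record_buffer_size and derive_playback_buffer_size are my own for this sketch, not Core Audio functions.

```c
#include <stdint.h>

/* Recorder logic: bytes for `seconds` of audio, capped at 320 KiB.
   Assumes one frame per packet, as the recorder's version does. */
uint32_t derive_record_buffer_size(double sampleRate, uint32_t maxPacketSize, double seconds) {
    const uint32_t maxBufferSize = 0x50000;
    double numBytesForTime = sampleRate * maxPacketSize * seconds;
    return (uint32_t)(numBytesForTime < maxBufferSize ? numBytesForTime : maxBufferSize);
}

/* Player logic: size by worst-case packet size, clamp to [16 KiB, 320 KiB],
   then count how many packets fit in one buffer. */
void derive_playback_buffer_size(double sampleRate, uint32_t framesPerPacket,
                                 uint32_t maxPacketSize, double seconds,
                                 uint32_t *outBufferSize, uint32_t *outNumPacketsToRead) {
    const uint32_t maxBufferSize = 0x50000; /* 320 KiB */
    const uint32_t minBufferSize = 0x4000;  /* 16 KiB */

    if (framesPerPacket != 0) {
        /* packets per second * seconds * worst-case packet size */
        double numPacketsForTime = sampleRate / framesPerPacket * seconds;
        *outBufferSize = (uint32_t)(numPacketsForTime * maxPacketSize);
    } else {
        /* Unknown frames per packet: the larger of the cap and one packet. */
        *outBufferSize = maxBufferSize > maxPacketSize ? maxBufferSize : maxPacketSize;
    }

    /* Cap at maxBufferSize unless a single packet is larger; raise to minBufferSize if smaller. */
    if (*outBufferSize > maxBufferSize && *outBufferSize > maxPacketSize) {
        *outBufferSize = maxBufferSize;
    } else if (*outBufferSize < minBufferSize) {
        *outBufferSize = minBufferSize;
    }

    *outNumPacketsToRead = *outBufferSize / maxPacketSize;
}
```

For 16-bit stereo PCM at 44.1 kHz (4-byte packets), half a second is 88,200 bytes, or 22,050 packets per buffer. For AAC with 1,024-frame packets and an assumed 1,536-byte packet upper bound, the player gets 33,075 bytes and reads 21 packets per callback, so the buffer is sized by the worst-case packet rather than the average one.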

## References

Audio Queue Services Programming Guide